It is widely believed that a distributed cache is a simple way to make everything faster. But if my past experience is any guide, cache misuse can make things even worse.

Let’s examine a few scenarios in which a poorly implemented cache could hurt, not help, performance.

Scenario one:

Issue: My database lags, and with your cache it slows down even more

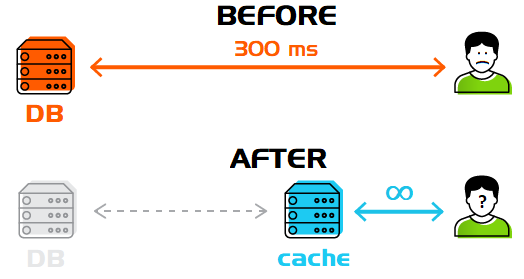

What does a typical web service look like?

Servlet ⟷ Database

Q: What should I do when the database can’t cope with the load?

A: Right! Buy new hardware!

Q: And when that doesn’t help?

A: OK, in that case set up a cache, preferably a distributed one!

Servlet ⟷ Cache ⟷ Database

Will it become faster? Maybe!

Why only “maybe”? Suppose you have achieved an incredible level of parallelism and can accept thousands of queries simultaneously, but every query asks for the same key: a hot offer on a landing page. Furthermore, all threads follow the same logic: “no cached value, so let's look it up in the database.”

What happens? Every thread visits the database and then updates the cached value, so the system spends even more time than it would with no cache at all. The problem is easily solved: synchronize the incoming queries that share a key.

Apache® Ignite™ offers a simple caching mechanism (Spring Caching) that supports synchronization.

```java
@Cacheable("dynamicCache")
public String cacheable(Integer key) {
    // Every thread that misses the cache will do the work :(
    return longOp(key);
}

@Cacheable(value = "dynamicCache", sync = true)
public String cacheableSync(Integer key) {
    // Only one thread per key will do the job.
    // All the other threads with the same key will await the result
    // from the first one instead of executing longOp(key).
    return longOp(key);
}
```

Synchronization support was added to Apache Ignite in release 2.1.
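To see why per-key synchronization matters, here is a minimal sketch that uses only the JDK. It is not Ignite or Spring code: `StampedeSketch`, `countLoads`, and the 50 ms `longOp` stand-in are names and values I made up for illustration. The unsynchronized path does a plain check-then-load, while the synchronized path relies on `ConcurrentHashMap.computeIfAbsent`, which applies the loader at most once per key.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** JDK-only illustration of per-key synchronization; not Ignite or Spring code. */
final class StampedeSketch {
    private final ConcurrentHashMap<Integer, String> cache = new ConcurrentHashMap<>();
    private final AtomicInteger loads = new AtomicInteger();

    /** Hypothetical stand-in for the slow database lookup. */
    private String longOp(Integer key) {
        loads.incrementAndGet();
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "value-" + key;
    }

    /** Check-then-act: every thread that sees a miss runs longOp itself. */
    String getUnsynchronized(Integer key) {
        String value = cache.get(key);
        if (value == null) {
            value = longOp(key);
            cache.put(key, value);
        }
        return value;
    }

    /** computeIfAbsent applies the loader at most once per key; other callers wait. */
    String getSynchronized(Integer key) {
        return cache.computeIfAbsent(key, this::longOp);
    }

    /** Fires `threads` concurrent requests for one hot key and returns the number of loads. */
    static int countLoads(boolean sync, int threads) {
        StampedeSketch sketch = new StampedeSketch();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        for (int i = 0; i < threads; i++) {
            pool.submit(() -> {
                try {
                    start.await(); // release all threads at the same moment
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                if (sync) {
                    sketch.getSynchronized(42);
                } else {
                    sketch.getUnsynchronized(42);
                }
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("requests did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sketch.loads.get();
    }

    public static void main(String[] args) {
        System.out.println("unsynchronized loads: " + countLoads(false, 16));
        System.out.println("synchronized loads:   " + countLoads(true, 16));
    }
}
```

With synchronization the hot key is loaded exactly once. Without it the count depends on thread timing, and it is typically well above one. Keep in mind that `computeIfAbsent` can also block updates to other keys that land in the same bin while the loader runs, so a real cache would want a more careful scheme.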

What if the result is this: “It’s faster, but it’s still way too slow”?

Don’t panic. This scenario is usually an extension of the first one, and it shows that cache developers can misuse caches too. The fix, adding synchronization across caches, went into production and… didn’t help this time.

In this case, let’s say all 1,000 threads, again serving the hot offer from the homepage, head for different keys simultaneously. When that happens, the synchronization mechanism itself becomes the bottleneck.

Before release 2.1, the metadata for all synchronizers was stored in the cache ignite-sys-cache under the single key DATA_STRUCTURES_KEY, as a Map<String, DataStructureInfo>, and every new synchronizer was added as follows:

```java
// Take global lock on the key
lock(cache, DATA_STRUCTURES_KEY);

// Add new synchronizer
Map<String, DataStructureInfo> map = cache.get(DATA_STRUCTURES_KEY);
map.put("Lock785", dsInfo);
cache.put(DATA_STRUCTURES_KEY, map);

// Release the lock
unlock(cache, DATA_STRUCTURES_KEY);
```

So, when creating the synchronizers they needed, all threads tried to modify the value under the same key, and each had to wait for its turn.

Fast again! But how robust?

Apache Ignite got rid of the “most important” key in release 2.1 and began to store synchronizer metadata separately. In real cases, users saw a performance boost of more than 9,000%.

And everything went well until the lights flickered, the UPS failed, and users lost a warmed-up cache.
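The difference between the two layouts can be sketched with plain JDK collections. This is a simplified illustration under my own assumptions, not Ignite's internal code: `SingleKeyRegistry`, `PerKeyRegistry`, and `registerAll` are hypothetical names, and the metadata is reduced to a string.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantLock;
import java.util.stream.IntStream;

/** Two hypothetical layouts for synchronizer metadata; not Ignite's actual code. */
final class RegistrySketch {
    static final String DATA_STRUCTURES_KEY = "DATA_STRUCTURES_KEY";

    /** Layout 1: one key holds the whole map, so every registration takes the same lock. */
    static final class SingleKeyRegistry {
        private final Map<String, Map<String, String>> cache = new ConcurrentHashMap<>();
        private final ReentrantLock globalLock = new ReentrantLock();

        void register(String name, String info) {
            globalLock.lock();
            try {
                // Read the whole map, copy it, modify it, write it back.
                Map<String, String> copy = new HashMap<>();
                Map<String, String> current = cache.get(DATA_STRUCTURES_KEY);
                if (current != null) {
                    copy.putAll(current);
                }
                copy.put(name, info);
                cache.put(DATA_STRUCTURES_KEY, copy);
            } finally {
                globalLock.unlock();
            }
        }

        int size() {
            Map<String, String> current = cache.get(DATA_STRUCTURES_KEY);
            return current == null ? 0 : current.size();
        }
    }

    /** Layout 2: one entry per synchronizer, so different names rarely contend. */
    static final class PerKeyRegistry {
        private final Map<String, String> cache = new ConcurrentHashMap<>();

        void register(String name, String info) {
            cache.putIfAbsent(name, info);
        }

        int size() {
            return cache.size();
        }
    }

    /** Registers `count` distinct synchronizers from parallel threads; returns both sizes. */
    static int[] registerAll(int count) {
        SingleKeyRegistry single = new SingleKeyRegistry();
        PerKeyRegistry perKey = new PerKeyRegistry();
        IntStream.range(0, count).parallel().forEach(i -> {
            single.register("Lock" + i, "info" + i);
            perKey.register("Lock" + i, "info" + i);
        });
        return new int[] {single.size(), perKey.size()};
    }
}
```

Both layouts end up with the same content. The difference is that the first one serializes every registration behind one lock and rewrites an ever-growing map, while the second touches only its own entry.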

In Apache Ignite, release 2.1 and later, there is a custom Persistence implementation.

Persistence by itself, though, does not guarantee a consistent state after a restart, because the data is synchronized (dumped to disk) periodically rather than in real time. Persistence is primarily needed to store and efficiently process more data on a single machine than fits into its memory.

Consistency is guaranteed by the write-ahead logging (WAL) approach. Simply put, each change is first written to disk as a logical operation, and only then is the data stored in the distributed cache.

When the server restarts, the disk holds a state that was current at some moment in time. It suffices to apply the WAL to this state, and the system is healthy and consistent again (fully ACID). Recovery completes within seconds, minutes at worst, rather than the hours or days it would take to warm up the cache with real queries.
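The recovery idea, last dumped state plus replayed log, fits in a toy example. This is a sketch under my own assumptions and has nothing to do with Ignite's actual storage format: `WalRecoverySketch` and its methods are hypothetical, and the “disk” is just two in-memory collections that survive the simulated crash.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy store with a periodic checkpoint and a write-ahead log; not Ignite's implementation. */
final class WalRecoverySketch {
    private final Map<String, String> memory = new HashMap<>();     // lost on crash
    private final Map<String, String> checkpoint = new HashMap<>(); // pretend this is on disk
    private final List<String[]> wal = new ArrayList<>();           // pretend this is on disk

    /** Log the operation first, then apply it to the in-memory state. */
    void put(String key, String value) {
        wal.add(new String[] {key, value});
        memory.put(key, value);
    }

    /** Periodic dump: persist the current state and drop the log entries it already covers. */
    void checkpoint() {
        checkpoint.clear();
        checkpoint.putAll(memory);
        wal.clear();
    }

    /** Simulates power loss: the in-memory state disappears, the "disk" survives. */
    void crash() {
        memory.clear();
    }

    /** Recovery: load the last checkpoint, then replay the log in order. */
    void recover() {
        memory.clear();
        memory.putAll(checkpoint);
        for (String[] op : wal) {
            memory.put(op[0], op[1]);
        }
    }

    String get(String key) {
        return memory.get(key);
    }

    public static void main(String[] args) {
        WalRecoverySketch store = new WalRecoverySketch();
        store.put("offer", "v1");
        store.checkpoint();
        store.put("offer", "v2"); // only in memory and in the log
        store.crash();
        store.recover();
        System.out.println(store.get("offer"));
    }
}
```

The update made after the checkpoint survives the crash because it was logged before it was applied. Without the log, recovery would stop at the checkpointed value.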

It’s robust! But how fast?

Persistence slows the system down (on writes, not reads). Enabling WAL slows it down even more, but we accept this tradeoff to ensure robustness.

There are several logging modes, with different guarantees:

- DEFAULT: complete data persistence guarantees under any load
- LOG_ONLY: complete guarantees in all cases except an operating system failure
- BACKGROUND: no guarantees, but Apache Ignite will do its best :)
- NONE: no operation logging.

On a system under average load, the speed difference between DEFAULT and NONE can reach 10-fold.

Back to our case. Suppose we have chosen BACKGROUND mode, which is 3 times slower than NONE, and we no longer worry about losing the warmed-up cache (at worst, we lose the operations from the last several minutes before the crash).

We have been running in this mode for several months, have been through a lot, and have easily restored the system after crashes. So far, so good.

But on December 20, at our website’s sales peak, we realized that the servers were already at 80% load and about to crash.

By disabling WAL (switching to NONE) we could cut the load threefold, but to do that we would need to restart the whole Apache Ignite cluster and accept that we couldn’t restore the cluster if something went wrong. Do we go back to the “fast and insecure” option?
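One way to picture where the cost comes from is to look at where a log record sits right after a write in each mode. The sketch below is my own simplification using plain `FileChannel` calls, not Ignite code: `WalModeSketch`, `flushBuffer`, and `bytesInFile` are hypothetical names, and the mapping of modes to “force to the device”, “hand to the OS”, and “keep in a process buffer” is an assumption based on the guarantees listed above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Shows where a log record ends up after log() in each mode; not Ignite code. */
final class WalModeSketch implements AutoCloseable {
    enum Mode { DEFAULT, LOG_ONLY, BACKGROUND, NONE }

    private final Mode mode;
    private final FileChannel channel;
    private final StringBuilder processBuffer = new StringBuilder();

    WalModeSketch(Path file, Mode mode) throws IOException {
        this.mode = mode;
        this.channel = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.WRITE, StandardOpenOption.APPEND);
    }

    void log(String record) throws IOException {
        switch (mode) {
            case DEFAULT:
                write(record + "\n");
                channel.force(true); // ask the OS to push the bytes to the device
                break;
            case LOG_ONLY:
                write(record + "\n"); // handed to the OS, which flushes on its own schedule
                break;
            case BACKGROUND:
                processBuffer.append(record).append('\n'); // still inside the process
                break;
            case NONE:
                break; // nothing is logged
        }
    }

    /** What a periodic background flusher would do. */
    void flushBuffer() throws IOException {
        if (processBuffer.length() > 0) {
            write(processBuffer.toString());
            processBuffer.setLength(0);
        }
    }

    private void write(String text) throws IOException {
        ByteBuffer buf = ByteBuffer.wrap(text.getBytes(StandardCharsets.UTF_8));
        while (buf.hasRemaining()) {
            channel.write(buf);
        }
    }

    /** Closes the file without flushing the process buffer, like a sudden process exit. */
    @Override
    public void close() throws IOException {
        channel.close();
    }

    /** Logs one record and returns the resulting file size in bytes. */
    static long bytesInFile(Mode mode, boolean flush) {
        try {
            Path file = Files.createTempFile("wal-sketch", ".log");
            try {
                try (WalModeSketch wal = new WalModeSketch(file, mode)) {
                    wal.log("put offer=v1");
                    if (flush) {
                        wal.flushBuffer();
                    }
                }
                return Files.size(file);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In BACKGROUND mode the record reaches the file only after the flush, so a process crash before that moment loses it. In DEFAULT mode every write pays for a forced sync, which is where most of the slowdown would come from in a design like this.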

In Apache Ignite 2.4 and later, it's possible to disable WAL without a cluster restart and then enable it again, restoring all of the guarantees.

SQL

```sql
-- Disable
ALTER TABLE tableName NOLOGGING;

-- Enable
ALTER TABLE tableName LOGGING;
```

Java

```java
// Current state
ignite.cluster().isWalEnabled(cacheName);

// Disable
ignite.cluster().disableWal(cacheName);

// Enable
ignite.cluster().enableWal(cacheName);
```

Now, if we need to disable logging, we can temporarily shut off WAL and enable it again at any appropriate moment. Once WAL is enabled again, the system behaves according to the chosen WAL mode.

Cache, even a distributed one, isn’t a silver bullet!

No technology, no matter how fancy and advanced, can solve all of your problems. Misused technology will likely only make things worse, and even correctly used technology will hardly close all the gaps.

A cache, including a distributed one, is a mechanism that is useful only when used well and tuned thoughtfully. Bear this in mind before adding one to your project: run benchmarks before and after every change related to it… and may high performance be with you!